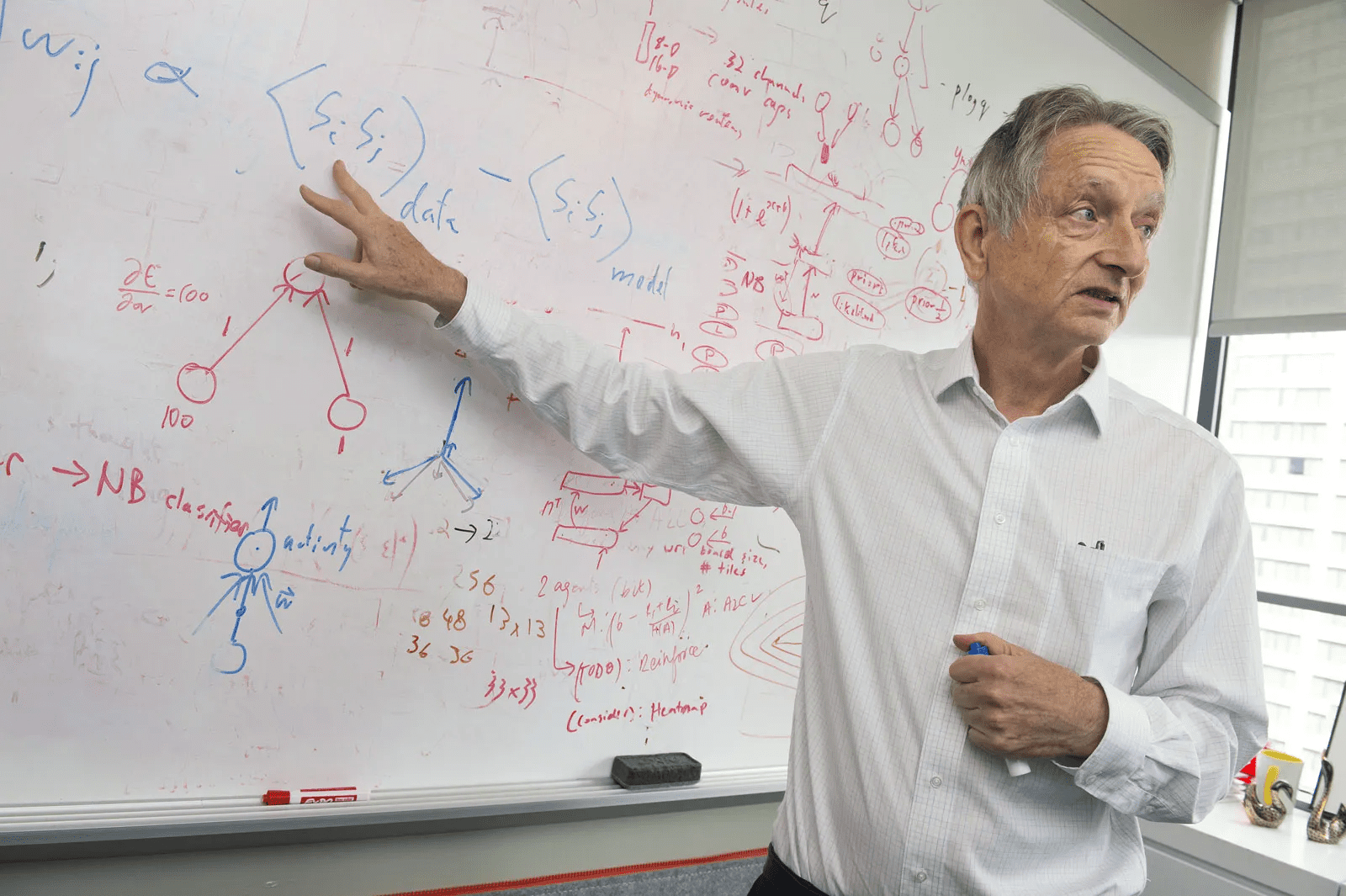

Geoffrey Hinton is a British-Canadian computer scientist and psychologist celebrated for his contributions to artificial intelligence (AI). His best-known research includes the development of machine learning (ML) algorithms designed to uncover complex structure in high-dimensional data. Hinton co-authored the 1986 paper that popularized the backpropagation algorithm for training multi-layer neural networks, and he was the first to use it to learn word embeddings. His other notable contributions to neural network research include Boltzmann machines, distributed representations, time-delay neural networks, variational learning, products of experts, and deep belief networks. His research group at the University of Toronto achieved major advances in deep learning that transformed speech recognition and object classification.

Family Ties and Education

Hinton was born on December 6, 1947, and grew up in England. He comes from a family of prominent intellectuals, including mathematician Mary Everest Boole, logician George Boole, surgeon James Hinton, and surveyor George Everest. From 1967 to 1970, Hinton studied at the University of Cambridge, exploring physiology, physics, and philosophy before earning a degree in experimental psychology. After briefly working as a carpenter, he began a PhD in artificial intelligence at the University of Edinburgh in 1972, which at the time offered the only graduate program in the subject in the UK.

Hinton completed his PhD in 1975 but officially received the degree in 1978. His research focused on neural networks, computational models loosely inspired by the structure of the human brain. Neural networks were an unpopular approach within AI during the 1970s, and Hinton’s advisor, Christopher Longuet-Higgins, often urged him to pursue a different one.

Professional Roles and the DNNresearch Auction

After earning his PhD, Hinton held research positions at the University of Sussex, the University of California, San Diego, the Medical Research Council (MRC), and Carnegie Mellon University before joining the University of Toronto as a professor in July 1987.

Apart from a three-year stint at University College London (1998–2001), Hinton remained at the University of Toronto, where he eventually became an emeritus professor. In 2012, Hinton and his students Alex Krizhevsky and Ilya Sutskever won the ImageNet Large Scale Visual Recognition Challenge, a prestigious competition to build the most accurate image-classification system. They then formed a company, DNNresearch, and put it up for auction among major tech firms, including Google, Microsoft, Baidu, and DeepMind. Hinton chose Google and joined Google Brain in March 2013, splitting his time between the company and the University of Toronto until May 2023, when he left Google, citing concerns about the risks posed by AI.

The Association for Computing Machinery (ACM) awarded Hinton, Yoshua Bengio, and Yann LeCun the 2018 Turing Award for the breakthroughs that made deep neural networks a critical component of modern computing.

Hinton’s Achievements

Hinton is a Fellow of the Royal Society, the Royal Society of Canada, and the Association for the Advancement of Artificial Intelligence. He is an honorary foreign member of the American Academy of Arts and Sciences and the National Academy of Engineering, as well as a past president of the Cognitive Science Society. He holds honorary doctorates from the Universities of Edinburgh, Sussex, and Sherbrooke.

Hinton’s accolades include the David E. Rumelhart Prize (2001), the IJCAI Award for Research Excellence (2005), the Killam Prize for Engineering (2012), and the IEEE James Clerk Maxwell Gold Medal (2016). In 2010 he received the NSERC Herzberg Gold Medal, Canada’s highest honor in science and engineering.

A Breakthrough in Science

Hinton was elected a Fellow of the Royal Society in 1998. By then, he had co-authored a seminal 1986 paper with David Rumelhart and Ronald Williams on backpropagation, a technique for training artificial neural networks that became known as the “missing mathematical piece” needed to advance machine learning. Backpropagation uses the chain rule to compute how much each connection weight contributes to a network’s error, so the weights can be adjusted automatically to reduce that error instead of being tuned by hand.
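To make the idea concrete, here is a minimal sketch of backpropagation in plain Python. It assumes a tiny network with one hidden layer of sigmoid units, a single output unit, and a squared-error loss; these choices, and every function name (`forward`, `backprop`, `sgd_step`, and so on), are illustrative assumptions rather than a reproduction of the 1986 paper's code. The forward pass computes the output. The backward pass applies the chain rule from the output back toward the input: it turns the output error into an error signal `delta2`, passes that signal back through the output weights to get each hidden unit's signal `delta1`, and multiplies each signal by the corresponding input to obtain a weight's gradient. A gradient-descent step then nudges every weight against its gradient, which is the automatic adjustment described above.

```python
import math
import random


def sigmoid(z):
    """Logistic activation used by every unit in this sketch."""
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Random weights for a network with one hidden layer and one output unit."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    b2 = 0.0
    return W1, b1, w2, b2


def forward(params, x):
    """Forward pass: return hidden activations h and the network output y."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y


def loss(params, x, t):
    """Squared error between the network output and the target t."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2


def backprop(params, x, t):
    """Backward pass: gradient of the loss with respect to every parameter."""
    _, _, w2, _ = params
    h, y = forward(params, x)
    # Output unit: chain rule through the loss, (y - t), and the sigmoid, y(1 - y).
    delta2 = (y - t) * y * (1.0 - y)
    grad_w2 = [delta2 * hj for hj in h]
    grad_b2 = delta2
    # Hidden units: send the error signal back through the output weights w2.
    delta1 = [delta2 * w2j * hj * (1.0 - hj) for w2j, hj in zip(w2, h)]
    grad_W1 = [[d * xi for xi in x] for d in delta1]
    grad_b1 = delta1
    return grad_W1, grad_b1, grad_w2, grad_b2


def sgd_step(params, grads, lr):
    """Move each parameter a small step against its gradient."""
    W1, b1, w2, b2 = params
    gW1, gb1, gw2, gb2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    return W1, b1, w2, b2 - lr * gb2


if __name__ == "__main__":
    # Demo: XOR, which a single unit with no hidden layer cannot represent.
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    params = init_params(n_in=2, n_hidden=3, seed=0)
    print("total loss before:", sum(loss(params, x, t) for x, t in data))
    for _ in range(5000):
        for x, t in data:
            params = sgd_step(params, backprop(params, x, t), lr=0.5)
    print("total loss after:", sum(loss(params, x, t) for x, t in data))
```

Run as a script, it trains the network on XOR with per-example gradient descent and prints the total loss before and after training. The learning rate, hidden-layer size, seed, and epoch count are arbitrary choices, and how close training gets to zero loss depends on them. A common way to check a hand-written backward pass like this one is to compare its gradients against finite-difference estimates of the loss.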

Backpropagation is fundamental to the chatbots used by millions of people every day, which rely on neural networks trained on massive text datasets to interpret prompts and generate responses. ChatGPT, for example, describes backpropagation as a “key breakthrough” that allows its parameters to be refined during training so that it makes more accurate predictions.
